Changhao Chen

The Hong Kong University of Science and Technology, Guangzhou, China

I am an Assistant Professor in the Intelligent Transportation Thrust and the Artificial Intelligence Thrust at The Hong Kong University of Science and Technology (Guangzhou), China. Before that, I was a lecturer (2021-2024) at the National University of Defense Technology, China, and a postdoctoral researcher (2020) in the Department of Computer Science, University of Oxford. I received my Ph.D. in Computer Science (2016-2020) from the University of Oxford, supervised by Prof. Niki Trigoni and Prof. Andrew Markham; my Master of Engineering (2014-2016) from the National University of Defense Technology; and my Bachelor of Engineering (2010-2014) from Tongji University, China.

I lead the HKUST-GZ PEAK Lab (Perception, Embodiment, Autonomy and Kinematics), where our research focuses on Embodied AI and Autonomous Systems, particularly the challenges of open-world robotic perception, navigation, and interaction. Traditional robotic algorithms often depend on meticulously crafted geometric and dynamic models, which can struggle to adapt to ever-changing, complex environments. Our research demonstrates that developing learning-based solutions, rather than relying solely on these static models, enables autonomous systems to achieve independent motion estimation, robust spatial scene perception, and reliable, safe navigation. Our work combines novel algorithms and methods (including learning and statistics, signal processing, optimization, geometry, and dynamics modelling) with system implementations (including sensor fusion, hardware-software co-design, and computing architectures). Our research outcomes have been applied to a diverse range of platforms, from robots, drones, and self-driving vehicles to smartphones, smartwatches, and VR/AR devices, supporting real-world applications in intelligent transportation, emergency rescue, and hospital efficiency enhancement.

Our major contributions have been in the following research directions:

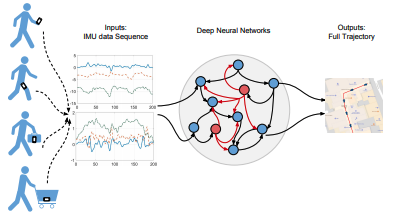

Ubiquitous Motion Tracking: we pioneered deep learning-based inertial positioning models, including IONet, M2EIT, and MotionTransformer, that provide precise motion estimation and positioning in GPS-denied environments and remain robust to environmental influences.

Robust Spatial Perception: our research develops novel learning and geometric methods for self-supervised learning-based SLAM (e.g., SelfOdom, P2Net, DevNet), city-scale localization and rendering (e.g., AtLoc, DroneNeRF), and visually degraded perception (e.g., Milli-Map, Milli-Ego, DarkSLAM, and ThermalLoc).

Safe Autonomous Navigation: our research develops task-driven multimodal fusion, stability-constrained dynamical modelling, and efficient policy learning for safe robot navigation in the physical world, including SelectFusion, DynaNet, and SGuidance.

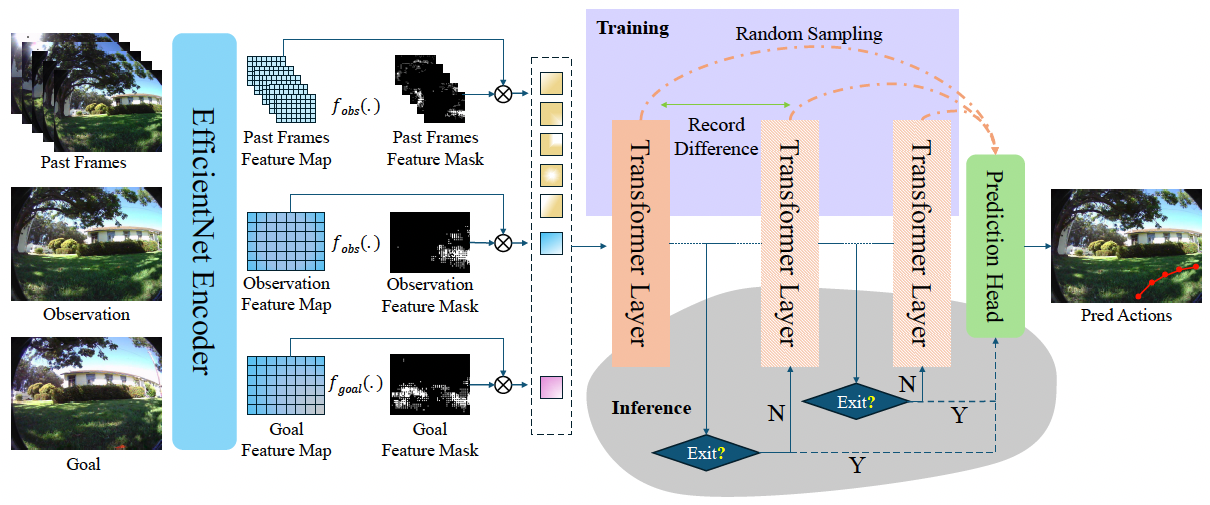

Embodied AI: our recent research focuses on efficient foundation models for robot navigation policy learning (e.g., DynaNav) and LLM-based global planning.

news

| Date | Announcement |
|---|---|
| Aug 17, 2025 | We have several open positions for Spring/Fall 2026, including fully funded Ph.D. scholarships and openings for Research Assistants and Visiting Students! If you want to join PEAK-Lab, please read here carefully. |

selected publications


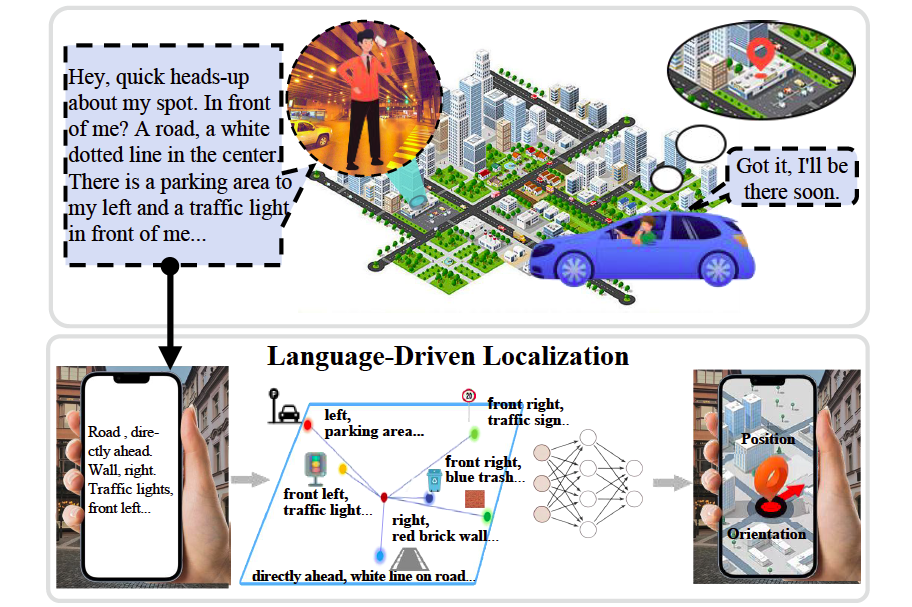

LangLoc: Language-Driven Localization via Formatted Spatial Description Generation. IEEE Transactions on Image Processing (TIP), 2025

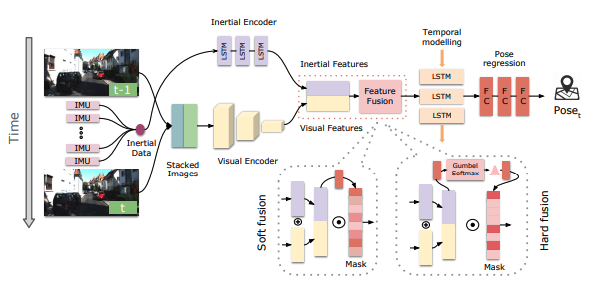

Learning selective sensor fusion for state estimation. IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2022

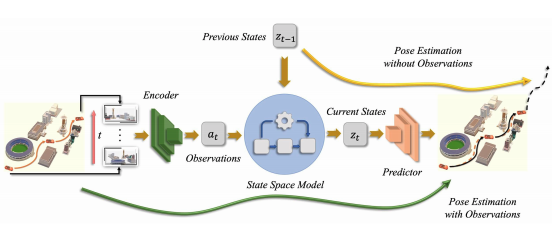

DynaNet: Neural Kalman dynamical model for motion estimation and prediction. IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2021

Deep Neural Network Based Inertial Odometry Using Low-cost Inertial Measurement Units. IEEE Transactions on Mobile Computing (TMC), 2020